Apple has been granted a patent (number 11561621 B2) for a “multimedia computing or entertainment system for responding to user presence and activity.” It hints at future Macs, iPads, and a HomePod with a built-in display that respond to gestures, as well as a user’s body movements.

About the patent

In the patent, Apple says that traditional user interfaces for computers and multi-media systems aren’t ideal for a number of applications and are not sufficiently intuitive for many others. In a professional context, giving stand-up presentations or other types of visual presentations to large audiences is one example where controls are less than ideal and, in the opinion of many users, insufficiently intuitive, according to the tech giant.

In a personal context, gaming control and content viewing/listening are but two of many examples. In the context of an audio/visual presentation, the presentation is generally manipulated at the direction of a presenter who controls an intelligent device (e.g., a computer) through the use of remote control devices. Similarly, gaming and content viewing/listening also generally rely upon remote control devices.

Apple says such devices often suffer from inconsistent and imprecise operation or require the cooperation of another individual, as in the case of a common presentation. Some devices, for example in gaming control, use a fixed location tracking device (e.g., a trackball or joy-stick), a hand cover (aka, glove), or body-worn/held devices having incorporated motion sensors such as accelerometers. Traditional user interfaces including multiple devices such as keyboards, touch pads/screens, and pointing devices (e.g., mice, joysticks, and rollers) require both logistical allocation and a degree of skill and precision but, according to Apple, can often more accurately reflect a user’s expressed or implied desires. The equivalent ability to reflect user desires is more difficult to implement with a remote control system.

The tech giant says when a system has an understanding of its users and the physical environment surrounding the user, the system can better approximate and fulfill user desires, whether expressed literally or impliedly. For example, a system that approximates the scene of the user and monitors the user activity can better infer the user’s desires for particular system activities.

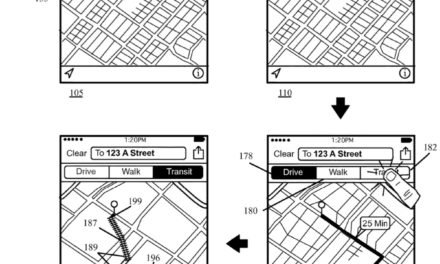

In addition, a system that understands context can better interpret express communication from the user, such as communication conveyed through gestures. Apple says that gestures have the potential to overcome the drawbacks of user interfaces that rely on conventional remote controls. Gestures have been studied as a promising technology for man-machine communication.

Various methods have been proposed to locate and track body parts (e.g., hands and arms) including markers, colors, and gloves. Current gesture recognition systems often fail to distinguish between various portions of the human hand and its fingers.

Apple says that many easy-to-learn gestures for controlling various systems can be distinguished and utilized based on specific arrangements of fingers. However, current techniques fail to consistently detect the portions of fingers that can be used to differentiate gestures, such as their presence, location and/or orientation by digit. Apple wants to change this.
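To make the idea of distinguishing gestures by finger arrangement concrete, here is a minimal Python sketch. It is not taken from the patent: the gesture names, the digit labels, and the `classify_gesture` helper are all hypothetical, and the sketch assumes some earlier stage (such as a depth sensor pipeline) has already worked out which digits are extended.

```python
from typing import Dict, FrozenSet, Iterable, Optional

# Hypothetical lookup table (not from the patent): each gesture is
# identified by the exact set of digits that are extended.
GESTURES: Dict[FrozenSet[str], str] = {
    frozenset(): "fist",
    frozenset({"index"}): "point",
    frozenset({"index", "middle"}): "peace",
    frozenset({"thumb"}): "thumbs_up",
    frozenset({"thumb", "index", "middle", "ring", "pinky"}): "open_palm",
}


def classify_gesture(extended: Iterable[str]) -> Optional[str]:
    """Return the gesture name for a set of extended digits, or None.

    The order of the digits does not matter; only which ones are present.
    """
    return GESTURES.get(frozenset(extended))
```

A lookup like this only works if each digit is detected reliably, which is the gap Apple says current techniques leave open.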

Summary of the patent

Here’s Apple’s abstract of the patent: “Intelligent systems are disclosed that respond to user intent and desires based upon activity that may or may not be expressly directed at the intelligent system. In some embodiments, the intelligent system acquires a depth image of a scene surrounding the system. A scene geometry may be extracted from the depth image and elements of the scene may be monitored. In certain embodiments, user activity in the scene is monitored and analyzed to infer user desires or intent with respect to the system.

“The interpretation of the user’s intent as well as the system’s response may be affected by the scene geometry surrounding the user and/or the system. In some embodiments, techniques and systems are disclosed for interpreting express user communication, e.g., expressed through hand gesture movements. In some embodiments, such gesture movements may be interpreted based on real-time depth information obtained from, e.g., optical or non-optical type depth sensors.”

Article provided with permission from AppleWorld.Today