As noted by 9to5Mac, Apple has announced a new artificial intelligence tool dubbed Keyframer that lets anyone create animations.

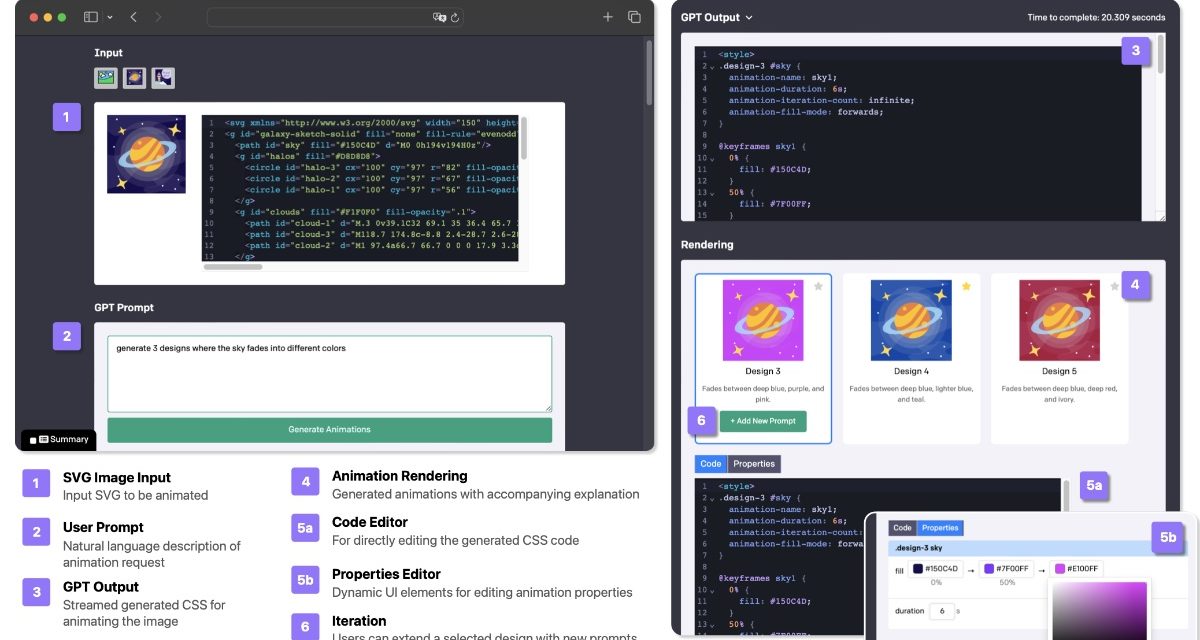

Here’s how Apple describes the tool: “Keyframer is a large language model (LLM)-powered animation prototyping tool that can generate animations from static images (SVGs). Users can iterate on their design by adding prompts and editing LLM-generated CSS animation code or properties. Additionally, users can request design variants to support their ideation and exploration.

“While one-shot prompting interfaces are common in commercial text-to-image systems like Dall·E and Midjourney, we argue that animations require a more complex set of user considerations, such as timing and coordination, that are difficult to fully specify in a single prompt—thus, alternative approaches that enable users to iteratively construct and refine generated designs may be needed especially for animations.

“We combined emerging design principles for language-based prompting of design artifacts with code-generation capabilities of LLMs to build a new AI-powered animation tool called Keyframer. With Keyframer, users can create animated illustrations from static 2D images via natural language prompting. Using GPT-4, Keyframer generates CSS animation code to animate an input Scalable Vector Graphic (SVG).”
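To make that description concrete, here is a minimal Python sketch of what "CSS animation code for an SVG" can look like in practice. It is not Apple's implementation and involves no LLM call: the `add_css_animation` helper, the sample `STATIC_SVG`, and the hand-written `PULSE_CSS` are all hypothetical stand-ins for the kind of output the quote describes.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"


def add_css_animation(svg_text: str, element_id: str, css: str) -> str:
    """Embed a CSS <style> block in an SVG after checking the target id exists.

    Hypothetical helper for illustration only, not part of Keyframer.
    """
    ET.register_namespace("", SVG_NS)  # keep plain <svg> tags on output
    root = ET.fromstring(svg_text)
    if not any(el.get("id") == element_id for el in root.iter()):
        raise ValueError(f"no element with id {element_id!r}")
    style = ET.Element(f"{{{SVG_NS}}}style")
    style.text = css
    root.insert(0, style)  # place the style block before the shapes
    return ET.tostring(root, encoding="unicode")


# A static illustration: one circle with an id the CSS can target.
STATIC_SVG = (
    '<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 100 100">'
    '<circle id="sun" cx="50" cy="50" r="20" fill="gold"/>'
    "</svg>"
)

# Hand-written stand-in for the CSS a model might return for a prompt
# such as "make the sun pulse gently" (assumed example prompt).
PULSE_CSS = """
#sun {
  transform-box: fill-box;
  transform-origin: center;
  animation: pulse 2s ease-in-out infinite;
}
@keyframes pulse {
  0%, 100% { transform: scale(1); }
  50% { transform: scale(1.2); }
}
"""

animated_svg = add_css_animation(STATIC_SVG, "sun", PULSE_CSS)
```

Saving `animated_svg` to a `.svg` file and opening it in a browser should show the circle pulsing. Editing the duration or scale values by hand is the kind of iteration on generated properties that the description mentions.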

On February 7, Apple released a new open-source AI model, called “MGIE,” that can edit images based on natural language instructions. MGIE, which stands for MLLM-Guided Image Editing, leverages multimodal large language models (MLLMs) to interpret user commands and perform pixel-level manipulations, according to VentureBeat.

The model can handle various editing aspects, such as Photoshop-style modification, global photo optimization, and local editing. VentureBeat notes that MGIE is the result of a collaboration between Apple and researchers from the University of California, Santa Barbara.

Article provided with permission from AppleWorld.Today