There’s an old saying that “the camera never lies.” With modern technology and image tricks such as deepfakes, that’s no longer true. However, Apple has been granted a patent (number 11,335,094) for “detecting fake videos,” at least with regard to using Face ID on its various devices.

About the patent

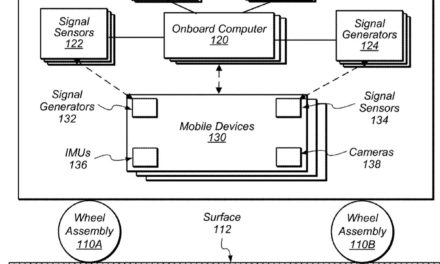

Systems such as Face ID rely on facial recognition to perform authentication. To be authenticated, a person presents their face to a camera so the system can verify that it matches the face of an authorized user.

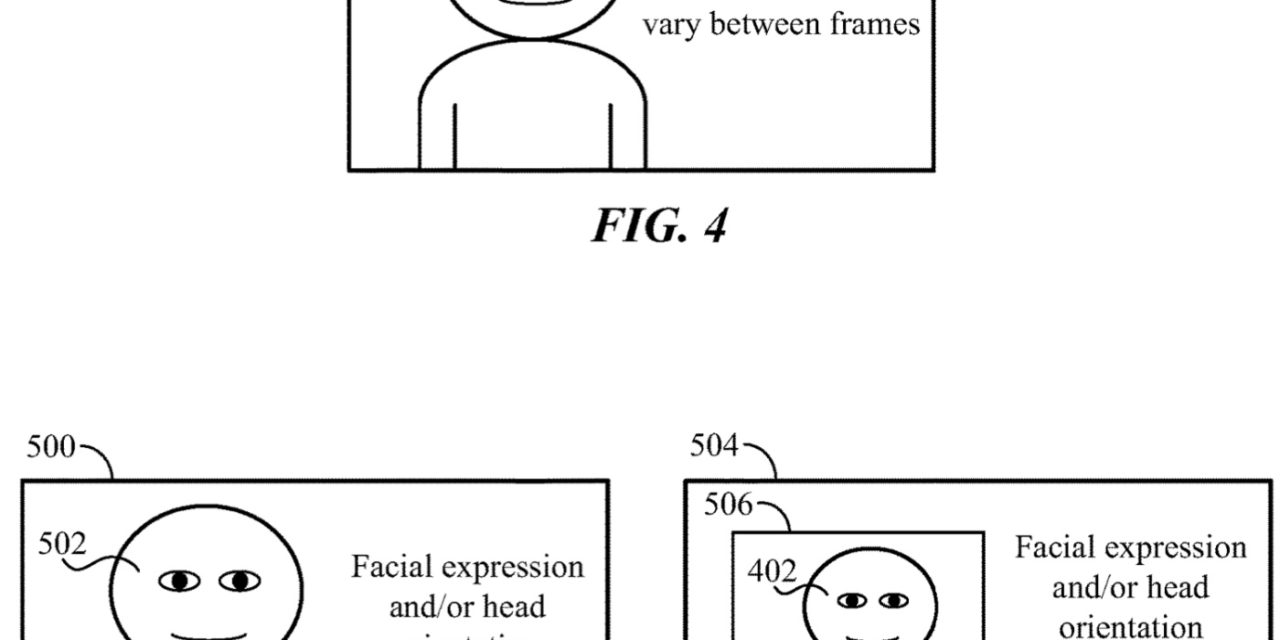

However, Apple notes that an unscrupulous person may try to gain unauthorized access to a computer system that uses facial-recognition-based authentication simply by holding up a life-size picture of the authorized user’s face.

It may be difficult to recognize that such a facial image is not a live image of the authorized user captured in real time. If the authentication process simply grants access based on recognizing the face of the authorized user, then access may mistakenly be granted to an unauthorized party.

Apple says that machine-learning techniques, including neural networks, may be applied to some computer vision problems. Neural networks may be used to perform tasks such as image classification, object detection, and image segmentation.

Neural networks have been trained to classify images using large datasets that include millions of images with ground-truth labels. Apple wants to include this technology in devices such as the iPhone, iPad, and, perhaps eventually, the Mac.

Summary of the patent

Here’s Apple’s abstract of the patent: “In one embodiment, a method includes accessing a plurality of verified videos depicting one or more subjects, generating, based on the verified videos, a plurality of verified-video feature values corresponding to the subjects, generating, using one or more video transformations based on the verified videos, a plurality of fake videos, generating, based on the fake videos, a plurality of fake-video feature values, training a machine-learning model to determine whether a specified video is a genuine video, wherein the machine-learning model is trained based on the verified-video feature values and the fake-video feature values. The machine-learning model may be trained to classify the specified video in a genuine-video class or a fake-video class, and the machine-learning model may be trained based on an association between a genuine-video class and the verified-video feature values and an association between a fake-video class and the fake-video feature values.”
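The abstract describes a pipeline: compute feature values from verified videos, synthesize fake videos by transforming those verified videos, compute feature values from the fakes, and train a model to sort videos into a genuine class or a fake class. As a rough illustration only, the Python sketch below walks through those steps on toy data. Everything specific in it is a hypothetical assumption, not taken from the patent: the synthetic “videos” (lists of frames of pixel values), the two transformations (`freeze_transform`, which mimics holding up a still photo, and `slow_transform`), the two features in `feature_values`, and the use of plain logistic regression instead of a neural network.

```python
import math
import random
from typing import List, Tuple

# Hypothetical toy representation: a frame is a flat list of pixel intensities in [0, 1].
Frame = List[float]
Video = List[Frame]


def make_verified_video(rng: random.Random, n_frames: int = 20, n_pixels: int = 16) -> Video:
    """Hypothetical stand-in for a verified live capture: pixels drift slightly every frame."""
    frame = [rng.random() for _ in range(n_pixels)]
    video = []
    for _ in range(n_frames):
        frame = [min(1.0, max(0.0, p + rng.gauss(0.0, 0.05))) for p in frame]
        video.append(frame)
    return video


def freeze_transform(video: Video) -> Video:
    """Hypothetical fake: repeat the first frame, like a still photo held up to the camera."""
    return [list(video[0]) for _ in video]


def slow_transform(video: Video) -> Video:
    """Hypothetical fake: show every frame twice (half-speed replay), cut to the original length."""
    doubled = [frame for frame in video for _ in range(2)]
    return doubled[: len(video)]


def feature_values(video: Video) -> List[float]:
    """Hypothetical features: mean frame-to-frame change and fraction of static transitions."""
    diffs = [sum(abs(a - b) for a, b in zip(f1, f2)) / len(f1) for f1, f2 in zip(video, video[1:])]
    mean_motion = sum(diffs) / len(diffs)
    static_fraction = sum(1 for d in diffs if d < 1e-9) / len(diffs)
    return [mean_motion, static_fraction]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(samples: List[List[float]], labels: List[int],
          lr: float = 1.0, epochs: int = 2000) -> Tuple[List[float], float]:
    """Logistic regression by full-batch gradient descent; label 1 = genuine, 0 = fake."""
    w = [0.0] * len(samples[0])
    b = 0.0
    m = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in zip(samples, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        w = [wi - lr * g / m for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def is_genuine(model: Tuple[List[float], float], video: Video) -> bool:
    """Classify a video into the genuine class (True) or the fake class (False)."""
    w, b = model
    x = feature_values(video)
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5


rng = random.Random(0)
verified = [make_verified_video(rng) for _ in range(20)]
# Generate fake videos by applying transformations to the verified videos.
fakes = [t(v) for v in verified for t in (freeze_transform, slow_transform)]
X = [feature_values(v) for v in verified] + [feature_values(v) for v in fakes]
y = [1] * len(verified) + [0] * len(fakes)
model = train(X, y)

held_out = make_verified_video(rng)
print(is_genuine(model, held_out), is_genuine(model, freeze_transform(held_out)))
```

Logistic regression stands in here only to keep the sketch short and dependency-free; the abstract speaks of a “machine-learning model” in general, and a real Face ID system would work on actual camera frames with far richer features.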

Article provided with permission from AppleWorld.Today