Apple has been granted a patent (number 11,138,798) for “devices, methods, and graphical user interfaces for displaying objects in 3D contexts.” The goal is to further strengthen the augmented reality features of the iPhone and iPad.

About the patent

In the patent data, Apple notes that the development of computer systems for augmented reality has increased significantly in recent years. Example AR environments include at least some virtual elements that replace or augment the physical world. Input devices, such as touch-sensitive surfaces, for computer systems and other electronic computing devices are used to interact with virtual/augmented reality environments. Examples of touch-sensitive surfaces include touchpads, touch-sensitive remote controls, and touch-screen displays. Such surfaces are used to manipulate user interfaces and objects therein on a display. Example user interface objects include digital images, video, text, icons, and control elements such as buttons and other graphics.

However, Apple says that methods and interfaces for interacting with environments that include at least some virtual elements are “cumbersome, inefficient, and limited.” Examples, per the tech giant, are:

° Systems that provide insufficient feedback for performing actions associated with virtual objects;

° Systems that require a series of inputs to generate virtual objects suitable for display in an augmented reality environment;

° Systems that lack handling for manipulating sets of virtual objects.

Apple says such systems are tedious, create a significant cognitive burden on a user, and detract from the experience with the virtual/augmented reality environment.

What’s more, Apple says these methods take longer than necessary, thereby wasting energy. The company wants to overcome such limitations.

Summary of the patent

Here’s Apple’s abstract of the patent: “An electronic device displays an environment that includes a virtual object that is associated with a first action that is triggered based on satisfaction of a first set of criteria. In response to a first input detected while the environment is displayed: the first action is performed in accordance with a determination that the first input satisfies the first set of criteria, and, in accordance with a determination that the first input does not satisfy the first set of criteria but instead satisfies a second set of criteria, the first action is not performed and instead a first visual indication of one or more inputs that if performed would cause the first set of criteria to be satisfied is displayed.”
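The decision logic in the abstract can be sketched in a few lines of code. This is only an illustration of the two-tier check the abstract describes, not Apple's implementation: the `Input` and `VirtualObject` types, the `handle_input` function, the lamp example, and the 0.5-second threshold are all hypothetical, and the "ignored" outcome for inputs that meet neither set of criteria is an assumption, since the abstract does not say what happens in that case.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Input:
    """Hypothetical user input: a gesture kind and how long it was held."""
    kind: str
    duration_s: float = 0.0


@dataclass
class VirtualObject:
    """Hypothetical virtual object with an action and a fallback hint."""
    name: str
    first_criteria: Callable[[Input], bool]   # input that triggers the action
    second_criteria: Callable[[Input], bool]  # near-miss input that triggers the hint
    hint: str                                 # describes input that would meet first_criteria


def handle_input(obj: VirtualObject, inp: Input) -> str:
    """Perform the action, show a hint instead, or do nothing."""
    if obj.first_criteria(inp):
        return f"action:{obj.name}"
    if obj.second_criteria(inp):
        # The action is NOT performed; a visual indication is shown instead.
        return f"hint:{obj.hint}"
    # Assumption: inputs matching neither set of criteria are ignored.
    return "ignored"


# Hypothetical example: a virtual lamp toggled by a press held for 0.5 s or more.
lamp = VirtualObject(
    name="lamp",
    first_criteria=lambda i: i.kind == "press" and i.duration_s >= 0.5,
    second_criteria=lambda i: i.kind == "press",
    hint="press and hold to toggle",
)
```

In this sketch, a long press on the lamp performs the action, while a short press leaves the lamp untouched and returns the hint text, mirroring the abstract's "first visual indication" of the inputs that would have worked.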

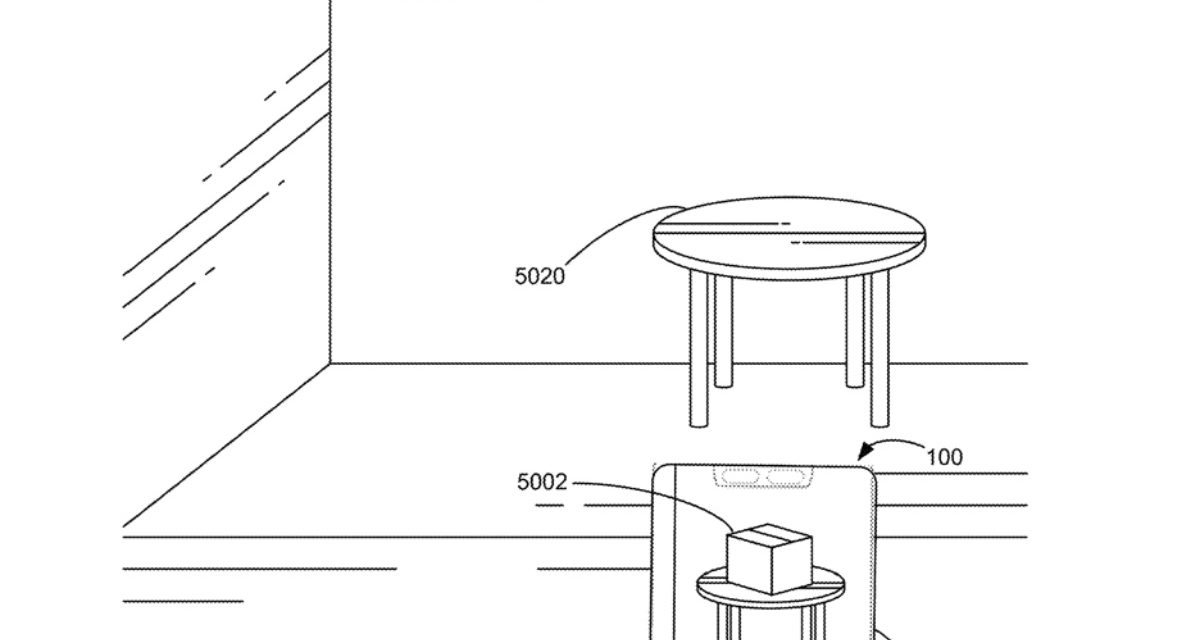

The accompanying graphic is an exam

Article provided with permission from AppleWorld.Today