Apple has filed for a patent (number US 20230334907 A1) for “emotion detection.” It involves the company’s Animoji and Memoji features.

Animoji let a user choose an avatar (e.g., a puppet) to represent themselves. The Animoji can move and talk as if it were a video of the user, giving users a fun and creative way to make personalized versions of emojis. Memoji is Apple’s name for personalized Animoji characters, which can be created and customized right within Messages by choosing from a set of inclusive and diverse characteristics to form a unique personality.

About the patent filing

In the patent filing Apple notes that computerized characters that represent and are controlled by users are commonly referred to as avatars. Avatars may take a wide variety of forms including virtual humans, animals, and plant life. Some computer products include avatars with facial expressions that are driven by a user’s facial expressions.

One use of facially-based avatars is in communication, where a camera and microphone in a first device transmit audio and a real-time 2D or 3D avatar of a first user to one or more second users on devices such as other mobile devices, desktop computers, videoconferencing systems and the like.

Apple says that existing systems tend to be computationally intensive, requiring high-performance general and graphics processors, and generally do not work well on mobile devices, such as smartphones or computing tablets. What’s more, existing avatar systems generally don’t provide the ability to communicate nuanced facial representations or emotional states.

Apple wants the cameras on Macs, iPhones, and iPads to be able to interact with Memoji and Animoji to show the emotions of their real-life counterparts.

Summary of the patent filing

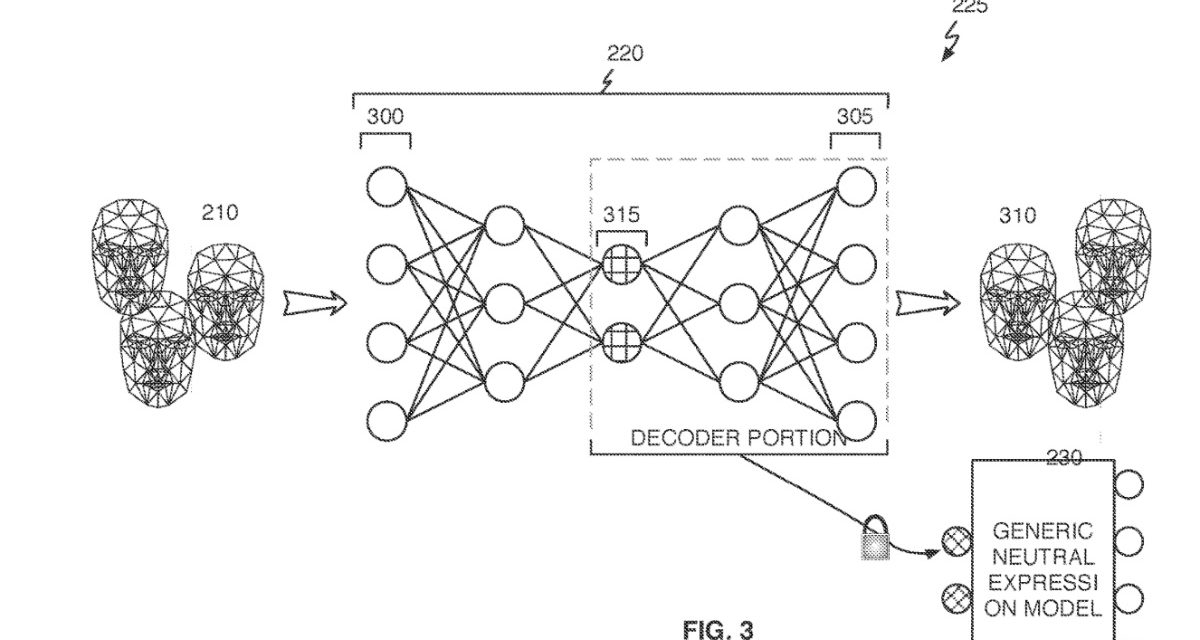

Here’s Apple’s abstract of the patent filing: “Estimating emotion may include obtaining an image of at least part of a face, and applying, to the image, an expression convolutional neural network (“CNN”) to obtain a latent vector for the image, where the expression CNN is trained from a plurality of pairs each comprising a facial image and a 3D mesh representation corresponding to the facial image. Estimating emotion may further include comparing the latent vector for the image to a plurality of previously processed latent vectors associated with known emotion types to estimate an emotion type for the image.”
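The comparison step in the abstract can be illustrated with a minimal Python sketch. It is only an illustration, not Apple’s implementation: the abstract does not say how latent vectors are compared, so cosine similarity with a nearest-match rule is an assumption here. The function names, the three-dimensional vectors, and the emotion labels are all hypothetical, and the expression CNN that would produce a real latent vector is not modeled.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def estimate_emotion(latent, references):
    """Return the (label, score) of the reference vector most similar to `latent`.

    `references` is a list of (emotion_label, latent_vector) pairs standing in
    for the "previously processed latent vectors" mentioned in the abstract.
    """
    best_label, best_score = None, float("-inf")
    for label, ref in references:
        score = cosine_similarity(latent, ref)
        if score > best_score:
            best_label, best_score = label, score
    return best_label, best_score


# Toy reference vectors (made up for illustration, not taken from the patent).
REFERENCES = [
    ("happy", [0.9, 0.1, 0.0]),
    ("sad", [0.1, 0.9, 0.1]),
    ("surprised", [0.0, 0.2, 0.9]),
]

# Stand-in for a latent vector that an expression CNN would output for an image.
label, score = estimate_emotion([0.8, 0.2, 0.1], REFERENCES)
print(label, round(score, 3))
```

In this toy setup the query vector lies closest to the “happy” reference, so that label is returned. A real system would work with much higher-dimensional vectors and might use a different distance measure or a learned classifier.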

Article provided with permission from AppleWorld.Today