Apple has filed for a patent (number 20220019335) that involves manipulating 3D objects on the screen of a Mac, iPhone, or iPad.

About the patent filing

In the patent filing, Apple notes that programming a computer-generated reality (CGR) application can be a difficult and time-consuming process. It requires expert knowledge in areas such as 3D object design and application coding, which “presents a high barrier to the generation of quality CGR applications.”

Apple says that, in particular, placing one or more CGR objects at desired locations and/or orientations in a CGR scene can be a cumbersome and/or confusing process. The company wants to make this process more accessible to folks beyond programmers.

Apple’s idea is to give electronic devices faster, more efficient methods and interfaces for spatially manipulating three-dimensional objects (such as CGR objects) on a two-dimensional screen. The company says these methods and interfaces can complement or replace the conventional ones.

Summary of the patent filing

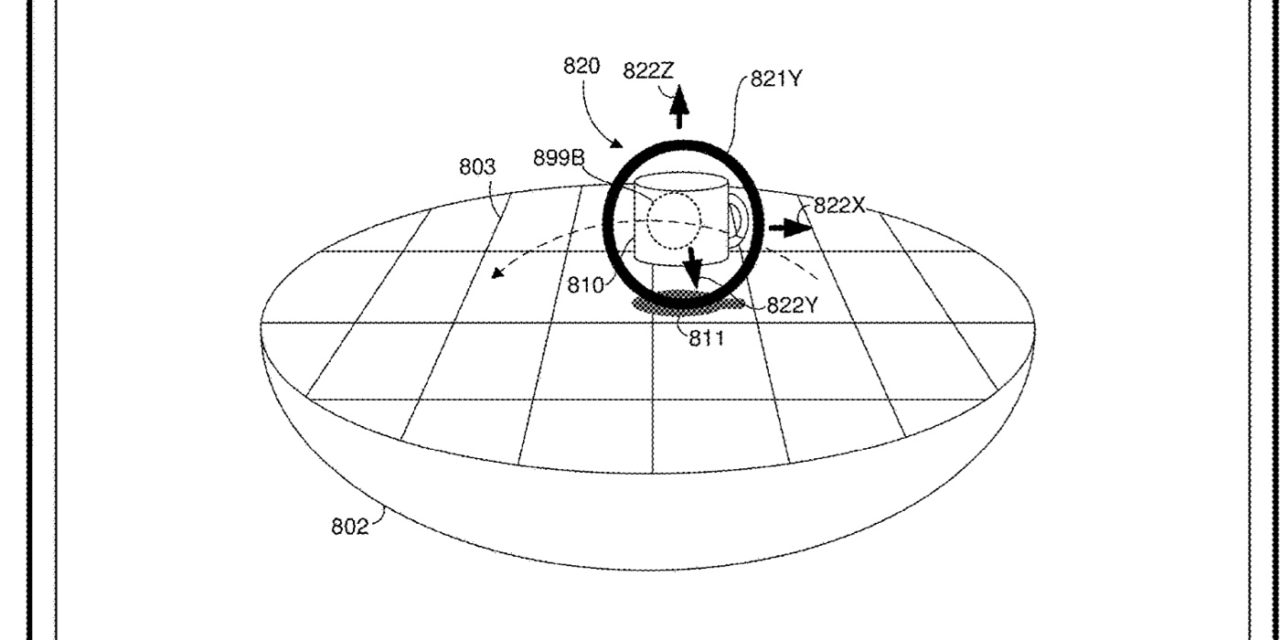

Here’s Apple’s abstract of the patent filing if you want the technical details: “Various implementations disclosed herein include a method performed by a device. The method includes displaying a three-dimensional object in a three-dimensional space. The method includes displaying a spatial manipulation user interface element including a set of spatial manipulation affordances respectively associated with a set of spatial manipulations of the three-dimensional object.

“Each of the set of spatial manipulations corresponds to a translational movement of the three-dimensional object along a corresponding axis of the three-dimensional space. The method includes detecting a first user input directed to a first spatial manipulation affordance of the [set of] spatial manipulation affordance[s]. The first spatial manipulation affordance is associated with a first axis of the three-dimensional space. The method includes, in response to detecting the first user input directed to the first spatial manipulation affordance, translationally moving the three-dimensional object along the first axis of the three-dimensional space.”
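The filing doesn’t include code, but the core interaction in the abstract (a handle tied to one axis, where dragging the handle moves the object only along that axis) can be illustrated with a small sketch. The Python below is a hypothetical illustration, not Apple’s implementation. All names (`Object3D`, `AxisAffordance`, `drag_to_distance`, `translate_along_axis`) are invented for this example, and it assumes the on-screen direction of each axis handle is already known from the camera projection. A 2D drag is projected onto that on-screen direction, and the resulting signed distance is applied along the corresponding 3D axis.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical unit vectors for the three axes of the 3D space.
AXES = {
    "x": (1.0, 0.0, 0.0),
    "y": (0.0, 1.0, 0.0),
    "z": (0.0, 0.0, 1.0),
}


@dataclass
class Object3D:
    position: tuple[float, float, float]


@dataclass
class AxisAffordance:
    axis: str  # which 3D axis this handle is associated with
    # Assumed input: how far (in screen points) one world unit along the axis
    # appears on screen, as a 2D vector derived from the camera projection.
    screen_dir: tuple[float, float]


def drag_to_distance(affordance: AxisAffordance, drag: tuple[float, float]) -> float:
    """Convert a 2D drag into a signed distance along the handle's 3D axis."""
    sx, sy = affordance.screen_dir
    length_sq = sx * sx + sy * sy
    if length_sq == 0.0:
        # The axis points straight at the camera, so a 2D drag says nothing about it.
        return 0.0
    dx, dy = drag
    # Keep only the part of the drag that lies along the projected axis.
    return (dx * sx + dy * sy) / length_sq


def translate_along_axis(obj: Object3D, affordance: AxisAffordance,
                         drag: tuple[float, float]) -> Object3D:
    """Move the object along the handle's axis only; other coordinates stay fixed."""
    distance = drag_to_distance(affordance, drag)
    axis = AXES[affordance.axis]
    obj.position = tuple(p + distance * a for p, a in zip(obj.position, axis))
    return obj


if __name__ == "__main__":
    cube = Object3D((0.0, 0.0, 0.0))
    # One world unit along x spans 100 points to the right on screen.
    x_handle = AxisAffordance("x", (100.0, 0.0))
    translate_along_axis(cube, x_handle, (50.0, 30.0))
    print(cube.position)  # (0.5, 0.0, 0.0): the vertical part of the drag is ignored
```

Projecting the drag onto the handle’s on-screen direction is what constrains the movement. Wherever the finger or pointer wanders, the object can only slide along the one axis the user picked, which is the behavior the abstract describes.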

Article provided with permission from AppleWorld.Today