An Apple patent (number 20120166192) for providing text input using speech data and non-speech data has appeared at the U.S. Patent & Trademark Office. It apparently involves the Dictation feature on the new iPad and the upcoming Mountain Lion operating system, as well as Siri on the iPhone 4S.

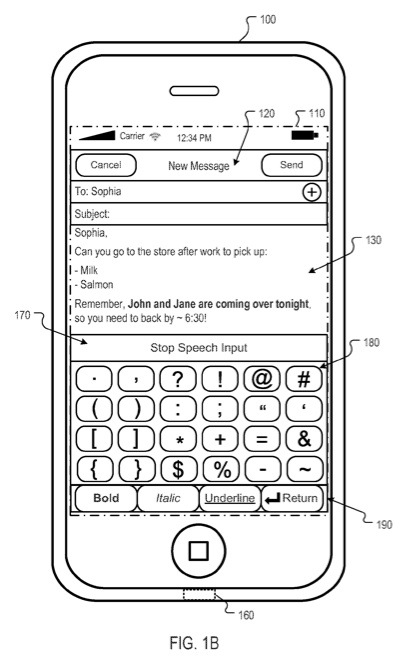

Per the patent, the system can receive speech input and non-speech input from a user through a user interface. The speech input is converted to text data, which can then be combined with the non-speech input for presentation to the user.

Here’s Apple’s background and summary of the invention: “Keyboards or keypads are often used to input text into computing devices. However, some software solutions enable the user to enter text data using speech. These software solutions convert the speech to text using speech recognition engines. However, these software solutions can be difficult to use when entering symbolic characters, style or typeface input because they typically require escape sequences to exit a speech input mode and then additional input to return to speech input mode.

“The disclosed implementations are directed to systems, methods, and computer readable media for providing an editor that can receive speech and non-speech input. Example systems can include an interface, a speech recognition module, and a text composition module. The interface can receive speech data and non-speech data from a mobile device, the speech data and non-speech data including sequence information. The speech recognition module can analyze the speech data to derive text data, the text data comprising sequence information associated with each of a plurality of words associated with the speech data.

“The text composition module can receive the text data and combine the text data with the non-speech data based upon the sequence information. The text composition module can thereby produce combined text data derived from the text data and the non-speech data. The interface can transmit the combined text data to the mobile device for presentation to a user of the mobile device.

“Example methods for providing a text editor can include: receiving speech input and non-speech input from a user, the speech input and non-speech input comprising respective sequence indicators; providing the speech input and non-speech input to a speech to text composition server; receiving text data from the speech to text composition server, the text data comprising a textual representation of the provided speech input combined with the provided non-speech input; and presenting the text data to the user.

“Example text editors can include a non-speech editing environment and a speech editing environment. The non-speech editing environment can be displayed during a non-speech editing mode, can receive keyboard data from a user, and can present text data related to the keyboard input to the user. The non-speech editing environment also includes a first escape sequence to enter a speech input mode.

“The speech editing environment can be displayed during the speech input mode. The speech editing environment can receive speech input and non-speech input from the user, and can present text data derived from the speech input and non-speech input to the user. The speech editing environment can include a second escape sequence used to resume the non-speech editing mode.

“Other implementations are disclosed, including implementations directed to systems, methods, apparatuses, computer-readable mediums and user interfaces.”
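The summary says the text composition module merges recognized words with non-speech input "based upon the sequence information" but does not spell out how. As a rough illustration only, here is a minimal Python sketch, assuming each recognized word and each non-speech input (for example, a typed punctuation mark) carries an integer sequence number. The `Word`, `NonSpeech` and `compose` names, and the spacing rule, are hypothetical and are not taken from Apple's filing.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Word:
    seq: int   # hypothetical sequence number attached by the recognizer
    text: str  # a word derived from the speech data


@dataclass
class NonSpeech:
    seq: int     # hypothetical sequence number attached by the device
    symbol: str  # e.g. a typed character such as "," or "!"


Item = Union[Word, NonSpeech]


def compose(words: List[Word], non_speech: List[NonSpeech]) -> str:
    """Interleave recognized words and non-speech input by sequence number."""
    merged: List[Item] = sorted([*words, *non_speech], key=lambda item: item.seq)
    out = ""
    for item in merged:
        if isinstance(item, Word):
            # Assumed rule: words are separated from earlier output by a space.
            out += (" " if out else "") + item.text
        else:
            # Assumed rule: symbols attach directly to the preceding text.
            out += item.symbol
    return out


if __name__ == "__main__":
    spoken = [Word(0, "Call"), Word(1, "me"), Word(3, "tomorrow")]
    typed = [NonSpeech(2, ","), NonSpeech(4, "!")]
    print(compose(spoken, typed))  # Call me, tomorrow!
```

Sorting on a shared sequence number is what lets a user type a symbol mid-dictation without leaving speech mode, which is the escape-sequence problem the background section describes.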

Kazuhisa Yanagihara is the inventor.

Also appearing at the U.S. Patent & Trademark Office are:

° Patent numbers 20120162095 and 20120162751 for an internal optical coating for an electronic device display. Per the patent, an internal optical coating includes multiple layers of different materials and thicknesses and is disposed between a transparent display cover and a visual display unit for an electronic device display. The inventors are Frank F. Liang, Amaury J. Heresztyn, Philip L. Mort, Carl Peterson, Benjamin M. Rappoport and John P. Ternus.

° Patent number 20120162156 for a system and method to improve image edge discoloration in a display. The inventors are Cheng Chen, Shih Chang Chang, Mingxia Gu and John Z. Zhong.

° Patent number 20120160079 for musical systems and methods. Per the patent, musical performance/input systems, methods, and products can accept user inputs via a user interface and generate, sound, store, and/or modify one or more musical tones. The inventors are Alexander Harry Little and Eli T. Manjarrez.

° Patent number 20120166429 is for using statistical language models for contextual look-up. The inventors are Jennifer Lauren Moore, Brent D. Ramerth and Douglas R. Davidson.

° Patent number 20120166765 is for predicting branches for vector partitioning loops when processing vector instructions. Jeffry E. Gonion is the inventor.

° Patent number 20120166942 is for using parts-of-speech tagging and named entity recognition for spelling correction. Techniques to automatically correct or complete text are disclosed. The inventors are Brent D. Ramerth, Douglas R. Davidson and Jennifer Lauren Moore.

° Patent number 20120166981 is for concurrently displaying a drop zone editor with a menu editor during the creation of a multimedia device. The inventors are Ralf Weber, Adrian Diaconu, Timothy B. Martin, William M. Bachman, Thomas M. Alsian, Reed E. Martin and Matt Evans.

° Patent number 20120167009 is for combining timing and geometry information for typing correction. Techniques to automatically correct or complete text are disclosed. The inventors are Douglas R. Davidson and Karan Misra.