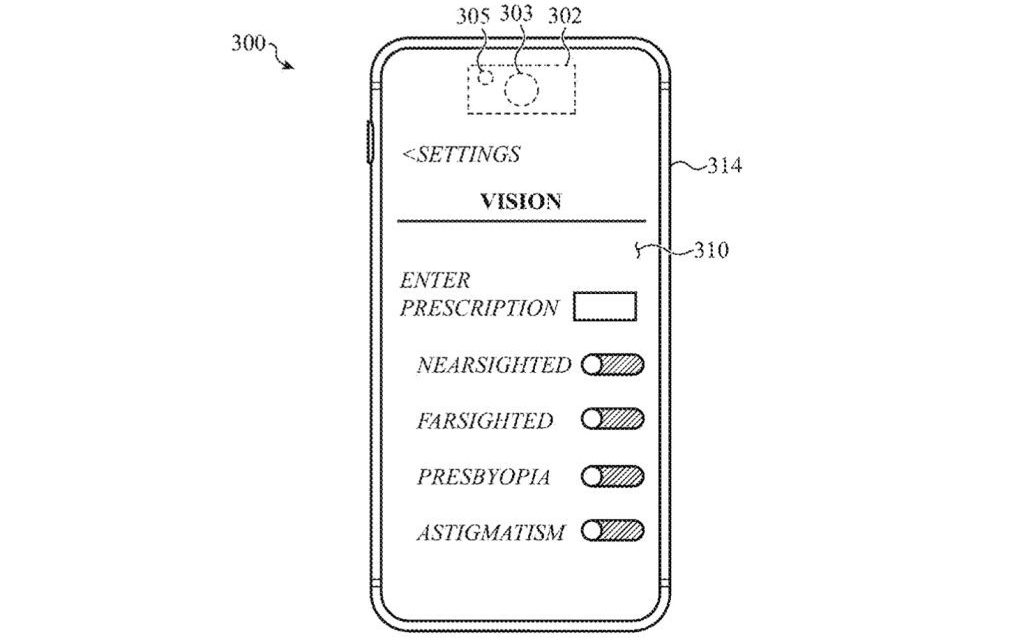

Future iPhones, iPads, and Macs could automatically adjust their screens based on your vision needs. Apple has filed for a patent (number 20220059055) for “systems and methods for switching vision correction graphical outputs on a display of an electronic device.”

About the patent filing

Near-sighted? Using stored biometric data, the Apple device would adjust its display for your eyes. Ditto for folks who are far-sighted, have astigmatism, etc. The patent filing could also cover eyeglasses that adapt to what you’re viewing (text up close, a computer screen at arm’s length, a football game, etc.). Such eyeglasses could replace those that use progressive lenses.

A large percentage of the human population requires prescription eyeglasses or contact lenses in order to see with sufficient clarity. For example, a person with nearsighted vision (myopia) may have difficulty perceiving far away objects. Similarly, a person with farsighted vision (hyperopia) may have difficulty perceiving nearby objects. In order to view an electronic display, a person with a vision deficiency may need to put on or remove prescription eyewear to avoid eye strain and/or to view the electronic display clearly.

In the patent filing, Apple notes that if such a person can’t easily remove or put on their prescription eyewear, it may be difficult to interact with the display, and the user experience may suffer. The tech giant wants to help overcome such issues with devices that scan a user’s face, generate a “depth map” from that scan, and use it to identify the user and apply display settings suited to their vision needs.

You would be able to edit the stored data to match your specific eyeglass or contact lens prescription if you wish. And such technology could also bring Face ID to the Mac.

Summary of the patent filing

Here’s Apple’s abstract of the patent filing, which lays out the technical details: “A method of providing a graphical output may include scanning at least a portion of a user’s face using a sensor; generating a depth map using the scan; and determining a similarity score between the depth map and a set of stored biometric identity maps that are associated with a registered user.

“In response to the similarity score exceeding a threshold, the user may be authenticated as the registered user. The method may further determine a corrective eyewear scenario, select a display profile that is associated with the corrective eyewear scenario, and generate a graphical output in accordance with the selected display profile.”
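To make the abstract’s flow concrete (score a face scan against enrolled maps, authenticate above a threshold, then pick a display profile for the detected eyewear scenario), here is a minimal Python sketch. It is an illustration under stated assumptions, not Apple’s implementation: the abstract doesn’t say how the similarity score is computed, so the 1 / (1 + RMS depth difference) score and the 0.9 threshold are assumptions, as is the idea of reading the eyewear scenario from whichever enrolled map matched best. The names `StoredMap`, `RegisteredUser`, `similarity`, and `provide_graphical_output` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class StoredMap:
    """Hypothetical enrolled depth map, tagged with the eyewear worn at enrollment."""
    depth: list[float]  # flattened depth values (assumed same size as a scan)
    eyewear: str        # hypothetical scenario label, e.g. "none" or "glasses"


@dataclass
class RegisteredUser:
    name: str
    maps: list[StoredMap]      # the "set of stored biometric identity maps"
    profiles: dict[str, dict]  # eyewear scenario -> display profile settings


def similarity(scan: list[float], stored: list[float]) -> float:
    """Assumed score: 1 / (1 + RMS depth difference); 1.0 means identical maps."""
    if len(scan) != len(stored) or not scan:
        raise ValueError("depth maps must be non-empty and the same size")
    mse = sum((a - b) ** 2 for a, b in zip(scan, stored)) / len(scan)
    return 1.0 / (1.0 + math.sqrt(mse))


def provide_graphical_output(scan: list[float], user: RegisteredUser,
                             threshold: float = 0.9) -> Optional[dict]:
    # Score the scan against every stored identity map and keep the best match.
    best_score, best_map = max(
        ((similarity(scan, m.depth), m) for m in user.maps),
        key=lambda pair: pair[0],
    )
    # Authenticate only if the similarity score exceeds the threshold.
    if best_score <= threshold:
        return None
    # Assumption: the eyewear scenario comes from the best-matching stored map.
    scenario = best_map.eyewear
    # Select the display profile tied to that scenario. A real device would
    # render with it; this sketch just returns the chosen settings.
    return {"user": user.name, "scenario": scenario,
            "profile": user.profiles[scenario]}
```

For example, a user enrolled both with and without glasses would get the “none” profile when a scan closely matches the glasses-free map, and `None` (not authenticated) when no enrolled map scores above the threshold. Keying profiles by eyewear scenario mirrors the abstract’s wording that a display profile “is associated with the corrective eyewear scenario.”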

Article provided with permission from AppleWorld.Today