In December Apple said it was killing an effort to design a privacy-preserving iCloud photo-scanning tool for detecting child sexual abuse material (CSAM) on the platform.

This week, a new child safety group known as Heat Initiative told Apple that it is organizing a campaign to demand that the company “detect, report, and remove” child sexual abuse material from iCloud and offer more tools for users to report CSAM to the company. (I’ve tried unsuccessfully to find a website for Heat Initiative.)

In August 2021 Apple announced a plan to scan photos users stored in iCloud for child sexual abuse material (CSAM). It was designed to detect child sexual abuse images on iPhones and iPads.

However, the plan was widely criticized, and in December Apple said that, in response to the feedback and guidance it received, the CSAM detection tool for iCloud Photos was dead.

“After extensive consultation with experts to gather feedback on child protection initiatives we proposed last year, we are deepening our investment in the Communication Safety feature that we first made available in December 2021,” the company told WIRED in a statement. “We have further decided to not move forward with our previously proposed CSAM detection tool for iCloud Photos. Children can be protected without companies combing through personal data, and we will continue working with governments, child advocates, and other companies to help protect young people, preserve their right to privacy, and make the internet a safer place for children and for us all.”

On August 6, 2021, Apple previewed new child safety features for its various devices that were due to arrive later that year.

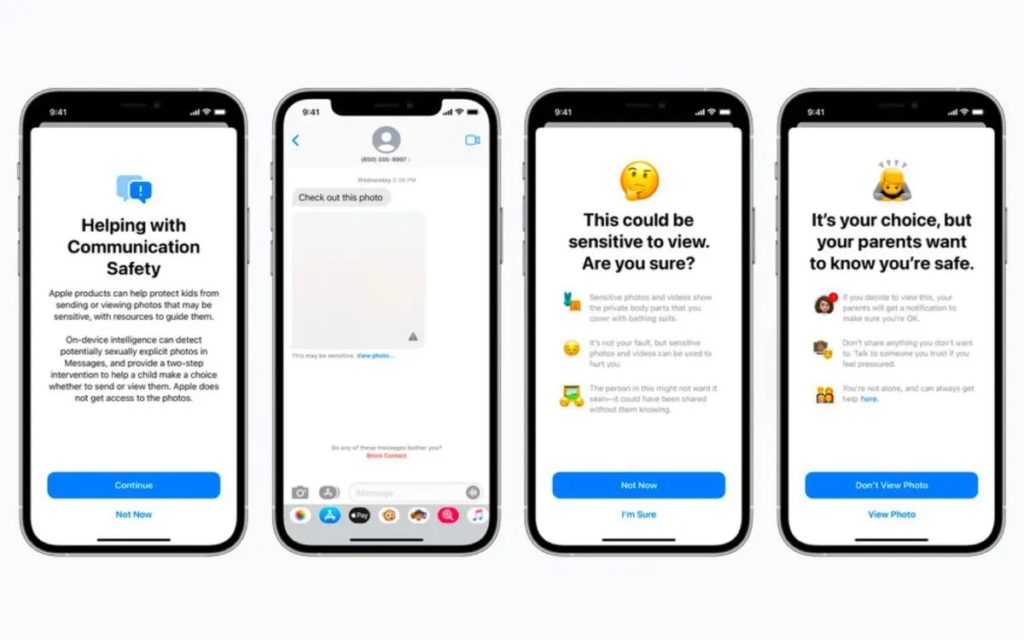

Here’s the gist of Apple’s announcement at the time: Apple is introducing new child safety features in three areas, developed in collaboration with child safety experts. First, new communication tools will enable parents to play a more informed role in helping their children navigate communication online. The Messages app will use on-device machine learning to warn about sensitive content, while keeping private communications unreadable by Apple.

Next, iOS and iPadOS will use new applications of cryptography to help limit the spread of CSAM online, while designing for user privacy. CSAM detection will help Apple provide valuable information to law enforcement on collections of CSAM in iCloud Photos.

Finally, updates to Siri and Search provide parents and children expanded information and help if they encounter unsafe situations. Siri and Search will also intervene when users try to search for CSAM-related topics.

However, following widespread criticism of the plan, in a September 3 statement to 9to5Mac, Apple said it was delaying the CSAM detection system and child safety features.

Here’s Apple’s statement from September 2021: Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material. Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.

Apple has responded to Heat Initiative, outlining its reasons for abandoning the development of its iCloud CSAM scanning feature and instead focusing on a set of on-device tools and resources for users known collectively as Communication Safety features. The company’s response to Heat Initiative, which Apple shared with WIRED, offers a look not just at its rationale for pivoting to Communication Safety, but also at its broader views on creating mechanisms to circumvent user privacy protections, such as encryption, to monitor data.

“Child sexual abuse material is abhorrent and we are committed to breaking the chain of coercion and influence that makes children susceptible to it,” Erik Neuenschwander, Apple’s director of user privacy and child safety, wrote to WIRED. He added, though, that after collaborating with an array of privacy and security researchers, digital rights groups, and child safety advocates, the company concluded that it could not proceed with development of a CSAM-scanning mechanism, even one built specifically to preserve privacy.

“Scanning every user’s privately stored iCloud data would create new threat vectors for data thieves to find and exploit,” Neuenschwander wrote. “It would also inject the potential for a slippery slope of unintended consequences. Scanning for one type of content, for instance, opens the door for bulk surveillance and could create a desire to search other encrypted messaging systems across content types.”

Article provided with permission from AppleWorld.Today