Apple has been granted a patent (number 11,348,299) for “online modeling for real-time facial animation.” It involves the company’s Animoji and Memoji features.

Animojis allow a user to choose an avatar (e.g., a puppet) to represent themselves. The Animoji can move and talk as if it were a video of the user. Animojis enable users to create personalized versions of emojis in a fun and creative way. Memoji is Apple’s name for personalized Animoji characters, which can be created and customized right within Messages by choosing from a set of inclusive and diverse characteristics to form a unique personality.

About the patent

In the patent, Apple notes that recent advances in real-time performance capture have brought a new form of human communication within reach. Capturing users’ dynamic facial expressions and re-targeting them onto digital characters enables communication through virtual avatars with live feedback.

However, Apple says that the state-of-the-art marker-based systems, multi-camera capture devices, and intrusive scanners commonly used in high-end animation productions aren’t suitable for consumer-level applications. What’s more, state-of-the-art approaches also require a priori creation of a tracking model and extensive training, which in turn requires building an accurate three-dimensional (3D) expression model of the user by scanning and processing a predefined set of facial expressions.

Beyond being time-consuming, such pre-processing is also error-prone. Users are typically asked to move their head in front of a sensor in specific static poses to accumulate sufficient information. However, assuming and maintaining a correct pose (e.g., keeping the mouth open at a specific, predefined opening angle) may be exhausting and difficult, and often requires multiple tries. Furthermore, manual corrections and parameter tuning are required to achieve satisfactory tracking results. For these reasons, Apple says that user-specific calibration “is a severe impediment for deployment in consumer-level applications.”

The tech giant wants to make the animation of digital characters based on facial performance capture as simple as possible. Apple wants end users to be able to create such animations without having to deal with systems that require specialized hardware setups and extensive, careful calibration.

Summary of the patent

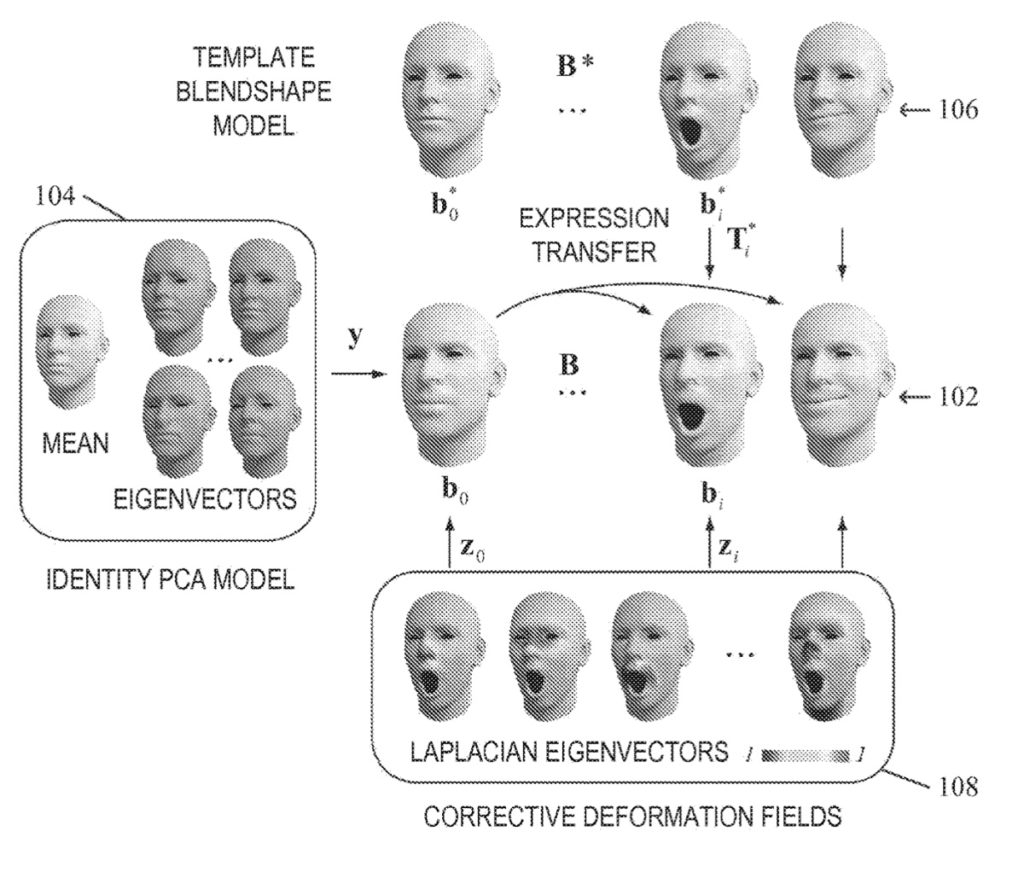

Here’s Apple’s abstract of the patent: “Embodiments relate to a method for real-time facial animation, and a processing device for real-time facial animation. The method includes providing a dynamic expression model, receiving tracking data corresponding to a facial expression of a user, estimating tracking parameters based on the dynamic expression model and the tracking data, and refining the dynamic expression model based on the tracking data and estimated tracking parameters. The method may further include generating a graphical representation corresponding to the facial expression of the user based on the tracking parameters. Embodiments pertain to a real-time facial animation system.”
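To make the abstract’s four steps concrete (provide a model, receive tracking data, estimate tracking parameters, refine the model), here is a toy Python sketch. It is not Apple’s implementation. The abstract doesn’t say how the dynamic expression model is represented, so everything here is an assumption made for illustration: a neutral face plus a single expression offset, a least-squares fit for one expression weight, a simple `rate`-based update, and all of the class and function names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ExpressionModel:
    """Toy stand-in for a dynamic expression model (an assumption, not the patent's)."""
    neutral: List[float]  # flattened landmark coordinates of the face at rest
    delta: List[float]    # offset added at full expression (e.g., mouth open)

    def render(self, weight: float) -> List[float]:
        # Face predicted by the model for a given expression weight.
        return [n + weight * d for n, d in zip(self.neutral, self.delta)]


def estimate_weight(model: ExpressionModel, observed: List[float]) -> float:
    """Estimate the tracking parameter: least-squares weight, clamped to [0, 1]."""
    num = sum(d * (o - n) for d, o, n in zip(model.delta, observed, model.neutral))
    den = sum(d * d for d in model.delta)
    if den == 0:
        return 0.0
    return min(1.0, max(0.0, num / den))


def refine(model: ExpressionModel, observed: List[float],
           weight: float, rate: float = 0.2) -> None:
    """Refine the model: nudge the neutral face toward what the fit could not explain."""
    predicted = model.render(weight)
    model.neutral = [n + rate * (o - p)
                     for n, o, p in zip(model.neutral, observed, predicted)]


def track(model: ExpressionModel, frames: List[List[float]],
          rate: float = 0.2) -> List[float]:
    """Run the estimate-then-refine loop over a stream of tracking frames."""
    weights = []
    for observed in frames:
        w = estimate_weight(model, observed)  # estimate tracking parameters
        refine(model, observed, w, rate)      # refine the model online
        weights.append(w)                     # w could drive an avatar here
    return weights
```

In this sketch the model starts out generic and adapts to the user while tracking runs, which is the idea the patent offers in place of a separate calibration session. The returned weights play the role of the tracking parameters that would drive the graphical representation.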

Article provided with permission from AppleWorld.Today