Apple has been granted a patent (number 11,093,752) for “object tracking in multi-view video.” It involves the rumored “Apple Glasses,” an augmented reality/virtual reality head-mounted display (HMD).

Background of the patent

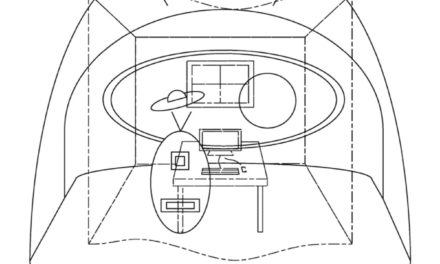

Some modern imaging applications capture image data from multiple directions about a reference point. Some cameras pivot during image capture, which allows a camera to capture image data across an angular sweep that expands the camera’s effective field of view.

Some other cameras have multiple imaging systems that capture image data in several different fields of view. In either case, an aggregate image may be created that represents a merger or “stitching” of image data captured from these multiple views.

In the patent data, Apple says that, often, the multi-view video isn’t displayed in its entirety. Instead, users often control display operation to select a desired portion of the multi-view image that is to be rendered. For example, when rendering an image that represents a 360° view about a reference point, a user might enter commands that cause rendering to appear as if it rotates throughout the 360° space, from which the user perceives that he is exploring the 360° image space.

Apple says that, while such controls provide intuitive ways for an operator to view a static image, they can be cumbersome when an operator views multi-view video data, where content elements can move, often in inconsistent directions. An operator is forced to enter controls continuously to watch an element of the video that draws his interest, which can become frustrating when the operator would prefer simply to observe desired content. Apple wants its Apple Glasses to render controls for multi-view video that don’t require operator interaction.

Summary of the patent

Here’s Apple’s abstract of the patent: “Techniques are disclosed for managing display of content from multi-view video data. According to these techniques, an object may be identified from content of the multi-view video. The object’s location may be tracked across a sequence of multi-view video. The technique may extract a sub-set of video that is contained within a view window that is shifted in an image space of the multi-view video in correspondence to the tracked object’s location. These techniques may be implemented either in an image source device or an image sink device.”
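To make the abstract's "view window" idea concrete, here is a minimal Python sketch. It is not Apple's implementation. It assumes the object's location in each frame is already known, and it assumes a frame layout in which columns wrap around horizontally (as in an equirectangular 360° image) while rows do not. The names `view_window` and `follow_object` are hypothetical and do not appear in the patent.

```python
def view_window(frame, center_x, center_y, win_w, win_h):
    """Crop a win_w x win_h window from `frame`, centered on the tracked object.

    Assumption: `frame` is a list of rows (each a list of pixels) whose
    columns wrap around horizontally (360 degrees); rows do not wrap.
    """
    height = len(frame)
    width = len(frame[0])
    if win_w > width or win_h > height:
        raise ValueError("window larger than frame")
    # Clamp vertically so the window stays inside the frame.
    top = min(max(center_y - win_h // 2, 0), height - win_h)
    # Horizontal start may be negative or run past the edge; modulo wraps it.
    left = center_x - win_w // 2
    return [
        [frame[row][(left + dx) % width] for dx in range(win_w)]
        for row in range(top, top + win_h)
    ]


def follow_object(frames, track, win_w, win_h):
    """Extract one window per frame, shifted to follow the tracked location.

    `track` is a sequence of (x, y) object positions, one per frame
    (hypothetical input; the tracking step itself is not shown here).
    """
    return [view_window(f, x, y, win_w, win_h) for f, (x, y) in zip(frames, track)]


if __name__ == "__main__":
    # Toy 4x8 "frame" whose pixels are just their (row, column) coordinates.
    frame = [[(r, c) for c in range(8)] for r in range(4)]
    # The object drifts left across the wrap-around seam over three frames.
    clips = follow_object([frame] * 3, [(1, 1), (0, 1), (7, 1)], win_w=4, win_h=2)
    for clip in clips:
        print(clip[0])
```

The window moves with the object rather than with viewer input, which is the behavior the abstract describes; a real system would also need the object identification and tracking steps, which are omitted here.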

About Apple Glasses

When it comes to Apple Glasses, such a device will arrive in 2022 or 2023, depending on which rumor you believe. It may be a head-mounted display, or it may have a design like “normal” glasses, or it may eventually be available in both. The Apple Glasses may or may not have to be tethered to an iPhone to work. Other rumors say that Apple Glasses could have a custom-built Apple chip and a dedicated operating system dubbed “rOS” for “reality operating system.”

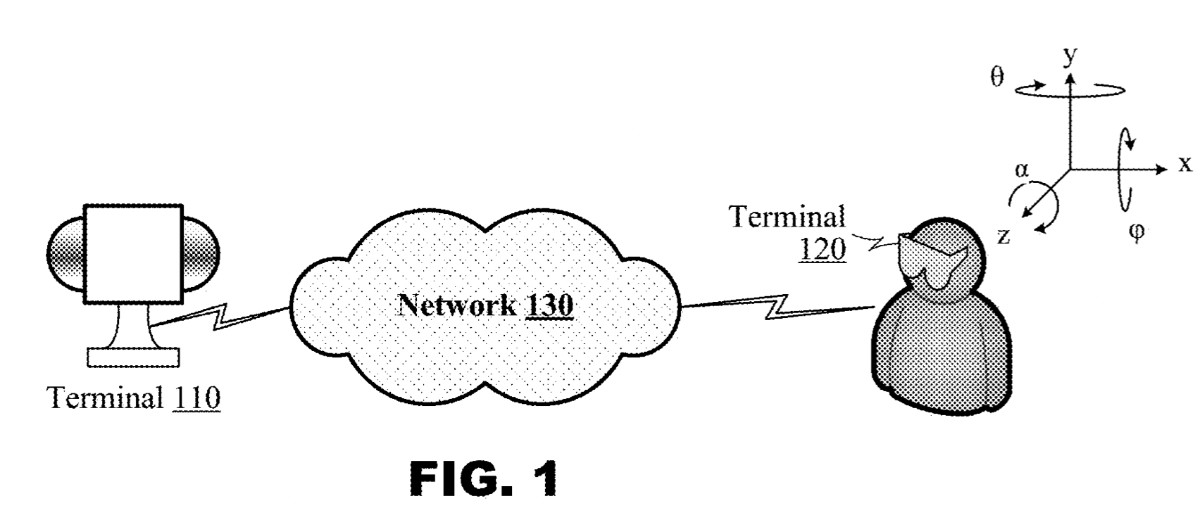

FIG. 1 illustrates “object tracking in multi-view video” with “Apple Glasses.”

Article provided with permission from AppleWorld.Today