Apple has been granted a patent (number 7924271) by the US Patent & Trademark Office for detecting gestures on multi-event sensitive devices. The method can include detecting gestures on or above a multi-event sensor panel and performing an action associated with detected gestures.

Such actions can include activating or changing the state of one or more GUI [graphical user interface] objects and emulating functions performed by a mouse or trackball input device. The inventors are Greg Christie and Wayne Carl Westerman.

Here’s Apple’s background and summary of the invention: “There exist today many styles of input devices for performing operations in a computer system. The operations generally correspond to moving a cursor and making selections on a display screen. The operations can also include paging, scrolling, panning, zooming, etc. By way of example, the input devices can include buttons, switches, keyboards, mice, trackballs, touch pads, joy sticks, touch screens and the like. Each of these devices has advantages and disadvantages that are taken into account when designing a computer system.

“Buttons and switches are generally mechanical in nature and provide limited control with regards to the movement of the cursor and making selections. For example, they can be generally dedicated to moving the cursor in a specific direction (e.g., arrow keys) or to making specific selections (e.g., enter, delete, number, etc.).

“In using a mouse instrument, the movement of the input pointer on a display generally corresponds to the relative movements of the mouse as the user moves the mouse along a surface. In using a trackball instrument, the movement of the input pointer on the display generally corresponds to the relative movements of a trackball as the user moves the ball within a housing. Mouse and trackball instruments typically also can include one or more buttons for making selections. A mouse instrument can also include scroll wheels that allow a user to scroll the displayed content by rolling the wheel forward or backward.

“With touch pad instruments such as touch pads on a personal laptop computer, the movement of the input pointer on a display generally corresponds to the relative movements of the user’s finger (or stylus) as the finger is moved along a surface of the touch pad. Touch screens, on the other hand, are a type of display screen that typically can include a touch-sensitive transparent panel (or ‘skin’) that can overlay the display screen. When using a touch screen, a user typically makes a selection on the display screen by pointing directly to objects (such as GUI objects) displayed on the screen (usually with a stylus or finger).

“To provide additional functionality, hand gestures have been implemented with some of these input devices. By way of example, in touch pads, selections can be made when one or more taps are detected on the surface of the touch pad. In some cases, any portion of the touch pad can be tapped, and in other cases a dedicated portion of the touch pad can be tapped. In addition to selections, scrolling can be initiated by using finger motion at the edge of the touch pad.

“U.S. Pat. Nos. 5,612,719 and 5,590,219, assigned to Apple Computer, Inc. describe some other uses of gesturing. U.S. Pat. No. 5,612,719 discloses an onscreen button that is responsive to at least two different button gestures made on the screen on or near the button. U.S. Pat. No. 5,590,219 discloses a method for recognizing an ellipse-type gesture input on a display screen of a computer system.

“In recent times, more advanced gestures have been implemented. For example, scrolling can be initiated by placing four fingers on the touch pad so that the scrolling gesture is recognized and thereafter moving these fingers on the touch pad to perform scrolling events. The methods for implementing these advanced gestures, however, can be limited and in many instances counter intuitive. In certain applications, especially applications involving managing or editing media files using a computer system, hand gestures using touch screens can allow a user to more efficiently and accurately effect intended operations.

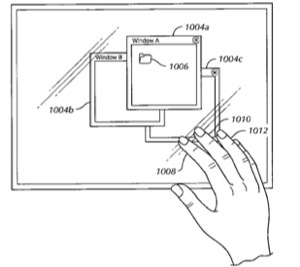

“This relates to detecting gestures with event sensitive devices (such as a touch/proximity sensitive display) for effecting commands on a computer system. Specifically, gestural inputs of a human hand over a touch/proximity sensitive device can be used to control and manipulate graphical user interface objects, such as opening, moving and viewing graphical user interface objects. Gestural inputs over an event sensitive computer desktop application display can be used to effect conventional mouse/trackball actions, such as target, select, right click action, scrolling, etc. Gestural inputs can also invoke the activation of an UI element, after which gestural interactions with the invoked UI element can effect further functions.”
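As a rough illustration of the four-finger scrolling example in the patent's background, here is a minimal Python sketch. It is not taken from the patent: the `FourFingerScrollRecognizer` class, the `ScrollEvent` type, and the choice to track the centroid of the contacts are all assumptions made for illustration. The recognizer arms when exactly four contacts are on the surface and then reports their movement as scroll deltas.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) position of one finger contact


@dataclass
class ScrollEvent:
    dx: float
    dy: float


class FourFingerScrollRecognizer:
    """Hypothetical recognizer, not Apple's method: arms when exactly four
    contacts are down, then reports centroid movement as scroll deltas."""

    REQUIRED_FINGERS = 4  # finger count from the example in the patent background

    def __init__(self) -> None:
        self._last_centroid: Optional[Point] = None

    @staticmethod
    def _centroid(touches: List[Point]) -> Point:
        n = len(touches)
        return (sum(x for x, _ in touches) / n, sum(y for _, y in touches) / n)

    def feed(self, touches: List[Point]) -> Optional[ScrollEvent]:
        """Process one frame of contacts; return a ScrollEvent if the fingers moved."""
        if len(touches) != self.REQUIRED_FINGERS:
            # Wrong number of fingers: the gesture is not (or no longer) recognized.
            self._last_centroid = None
            return None
        centroid = self._centroid(touches)
        previous, self._last_centroid = self._last_centroid, centroid
        if previous is None:
            return None  # gesture just recognized; no movement to report yet
        dx, dy = centroid[0] - previous[0], centroid[1] - previous[1]
        if dx == 0 and dy == 0:
            return None
        return ScrollEvent(dx, dy)
```

Feeding the recognizer one frame of four contacts arms it, and a later frame with the same four fingers shifted produces a `ScrollEvent`. Lifting a finger resets it, which mirrors the "recognize, then move" sequence the background describes.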

— Dennis Sellers